Aperture Data Studio is a self-contained, web-based application that runs on most Java-compliant operating systems on commodity hardware. It takes full advantage of 64-bit architectures, is multi-threaded, and scales linearly.

To avoid potential performance drops during critical operations, we strongly recommend that you ensure your anti-virus software does not check the directories containing Aperture Data Studio data files and that any system sweeps are scheduled to run outside of office hours/data loading periods.

We strongly recommend using Chrome to access the application.

See our recommendations depending on your workload:

Virtual cores refer to the cores of a virtual machine or an Azure/AWS instance. On physical hardware, the equivalent is the number of CPU threads available to the server. Slower core speeds still affect performance, but the main factor is how many cores are available to Data Studio.

Has the most impact on view operations (joining, sorting, grouping, lookups, expression evaluation, etc.).

Affects sorting, grouping, profiling and the ability to support concurrent user activity/workflow execution.

We recommend that Data Studio uses a maximum of 16 GB (see the testing results), which means you might need at least 24 GB so that the OS and other software can run without impacting performance.

Affects joining, profiling, lookup index creation and, to an extent sorting and grouping.

When viewed in the Windows Task Manager, the memory usage of the Data Studio process appears to go up but never down. This is normal behavior for a Java-based application. To release memory already in use by Data Studio, restart the service.

By default, our JVM settings will use 66% of the total system memory that's available, up to a maximum of 16 GB. This means that you might want at least 24 GB available to maximize memory utilization.
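As a rough illustration of the rule above, the following sketch estimates how much memory Data Studio would use by default for a given amount of system RAM. The function name and parameters are hypothetical and not part of Data Studio; only the 66% fraction and the 16 GB cap come from this page.

```python
def default_heap_gb(total_ram_gb: float,
                    fraction: float = 0.66,
                    cap_gb: float = 16.0) -> float:
    """Estimate the default memory used by Data Studio, in GB.

    Assumption: the default is simply 66% of total system memory,
    capped at 16 GB, as described in the text.
    """
    return min(total_ram_gb * fraction, cap_gb)


if __name__ == "__main__":
    for ram in (8, 16, 24, 32):
        print(f"{ram} GB system RAM -> about {default_heap_gb(ram):.2f} GB for Data Studio")
```

Under these assumptions, a 24 GB machine yields about 15.84 GB, which is just under the 16 GB cap; anything larger is limited by the cap itself.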

Disks have the biggest impact on load/profile times for Data Studio. You should therefore use the fastest hard drives possible, ideally Enterprise SSDs (if hosted, make them provisioned versions). We recommend using RAID-0 for data storage.

Average disk throughput is the most important factor (IOPS is far less of a consideration). It affects profiling, loading and exporting. For large data sets, it can also affect joining, sorting and grouping.

Database size (after load/profile) can be easily estimated. The loaded database size is around 80% and the profiled one is around 165% of the original file size.
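The estimate above can be sketched as a small helper. The function name is hypothetical; the 80% and 165% ratios are the approximate figures quoted in the text, so the result is a planning estimate rather than an exact size.

```python
LOADED_RATIO = 0.80     # loaded database is around 80% of the source file size
PROFILED_RATIO = 1.65   # profiled database is around 165% of the source file size


def estimate_database_size_gb(source_file_gb: float, profiled: bool = False) -> float:
    """Return the approximate database size in GB for a given source file size."""
    ratio = PROFILED_RATIO if profiled else LOADED_RATIO
    return source_file_gb * ratio


if __name__ == "__main__":
    source = 10.0  # example: a 10 GB source file
    print(f"Loaded:   about {estimate_database_size_gb(source):.1f} GB")
    print(f"Profiled: about {estimate_database_size_gb(source, profiled=True):.1f} GB")
```

For a 10 GB source file, this gives roughly 8 GB after loading and 16.5 GB after profiling.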

Based on your requirements, we suggest the following Amazon Web Services (AWS) instance types: i3, c4, c5 or m4.

These AWS instances provide the best performance for Data Studio without excess CPU or RAM that wouldn't be used. They also provide disks that reduce the impact of potentially shared cloud resources, which can cause bottlenecks for Data Studio.

For standard usage on Azure, we recommend higher-end DS instances with standard (default) storage on the standard tier, not the basic one. For maximum performance, we recommend pairing the chosen DS instance with the amount of premium storage that fits your needs.

These Azure instances provide the best performance for Data Studio without excess CPU or RAM that wouldn't be used.

We strongly recommend that your antivirus software does not check the directories containing Data Studio data files, and that any system sweeps are scheduled to run outside of office hours/periods when data loading/profiling can occur. This is to avoid any performance drops that might happen during critical operations.

Recommendations below are for single users (max 2-3 concurrent users) or when processing fewer than 10 million rows of data.

| Component | Recommendation |
|---|---|
| Operating System (OS) | Windows 64-bit (10), Server 2012 64-bit, 2016 64-bit. |
| Processor (CPU) | 8 virtual cores, equivalent of an Intel Quad Core CPU. |
| Memory (RAM) | 16 GB dedicated for Aperture Data Studio usage. You may require more (e.g. 32 GB) for OS and any other software. |
| Disk (HDD, SSD, etc.) | Consumer SSDs (as fast as possible). |

Find out more about each component.

Recommendations below are for 4-10 users or when processing 10-100 million rows of data.

| Component | Recommendation |
|---|---|
| Operating System (OS) | Windows 64-bit (10), Server 2012 64-bit, 2016 64-bit. |
| Processor (CPU) | 16 virtual cores, equivalent of an Intel Quad Core CPU. |
| Memory (RAM) | 32 GB dedicated for Aperture Data Studio usage. You may require more (e.g. 48 GB) for OS and any other software. |
| Disk (HDD, SSD, etc.) | Enterprise SSDs (as fast as possible). |

Find out more about each component.

Recommendations below are for more than 10 users or when processing 100 million - 1 billion rows of data.

| Component | Recommendation |
|---|---|
| Operating System (OS) | Windows 64-bit (10), Server 2012 64-bit, 2016 64-bit. |
| Processor (CPU) | 16 virtual cores, equivalent of Dual Intel Quad Core CPUs. |
| Memory (RAM) | 128 GB dedicated for Aperture Data Studio usage. You may require more for OS and any other software. |
| Disk (HDD, SSD, etc.) | Enterprise Provisioned SSDs (as fast as possible). |

Find out more about each component.
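The three sets of recommendations above can be read as a simple lookup by user count and row count. The sketch below is illustrative only: the tier names ("small", "medium", "large"), the function names, and the rule that the more demanding of the two criteria wins are assumptions, as is the treatment of boundary values (exactly 100 million rows is treated as the middle tier). Row counts above 1 billion fall outside the documented ranges and are simply mapped to the largest tier here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str           # hypothetical label, not used in the product
    virtual_cores: int
    ram_gb: int         # RAM dedicated to Data Studio
    disk: str


TIERS = [
    Tier("small", 8, 16, "Consumer SSDs"),
    Tier("medium", 16, 32, "Enterprise SSDs"),
    Tier("large", 16, 128, "Enterprise Provisioned SSDs"),
]


def tier_index_for_users(users: int) -> int:
    """Map a concurrent user count to a tier index (0, 1 or 2)."""
    if users <= 3:
        return 0
    if users <= 10:
        return 1
    return 2


def tier_index_for_rows(rows: int) -> int:
    """Map a row count to a tier index (0, 1 or 2)."""
    if rows < 10_000_000:
        return 0
    if rows <= 100_000_000:
        return 1
    return 2


def recommend(users: int, rows: int) -> Tier:
    """Pick the more demanding tier of the two criteria (an assumption)."""
    return TIERS[max(tier_index_for_users(users), tier_index_for_rows(rows))]


if __name__ == "__main__":
    print(recommend(users=2, rows=50_000_000))
```

For example, two users working with 50 million rows would land in the middle tier, because the row count is the more demanding of the two criteria.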

For smaller datasets, Aperture Data Studio can be easily run on a virtual machine (VM). For larger datasets or intensive usage, it's worth taking the following into account to ensure maximum performance:

For particularly large datasets, we recommend using a dedicated server instead of a VM.

We ran a selection of predefined workflows with different amounts of RAM to find the optimum amount for the JVM. Fixing the RAM allocation removed one variable from later tests, which made the statistics gathered from different instances more directly comparable.

We also ran a predefined suite of tests on various machines from Azure and AWS to get some basic hardware recommendations. Two machines from Azure and two machines from AWS were used. Our focus was on checking the impact of the disks.

From the tests and results below, we've determined that 16 GB of RAM is optimum for all these processes as well as loading/profiling.

Workflow test

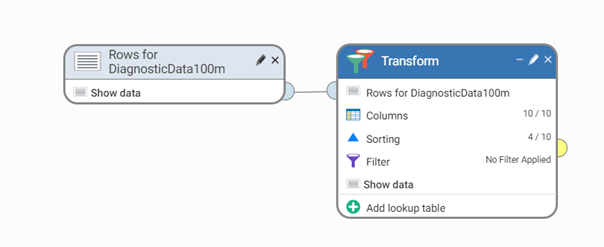

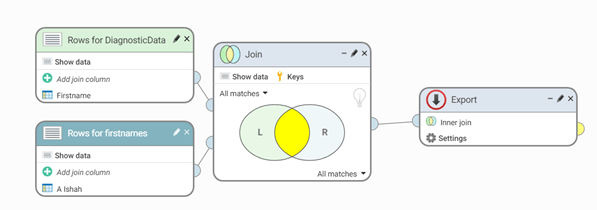

These workflows were run with a data set created using our data generator. It contains 10 columns and 10 million rows of fairly unique data, made to resemble customer records (Firstname, Lastname, Order ID, etc.).

The aim of these workflows was to test some of the most commonly used steps, checking the performance with varying amounts of RAM. In these tests, we covered Validation, Table lookups, Transformations, Exporting, Joins and Sorting.

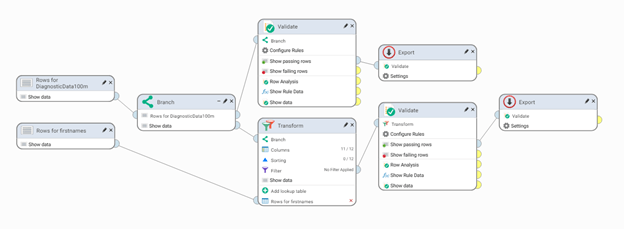

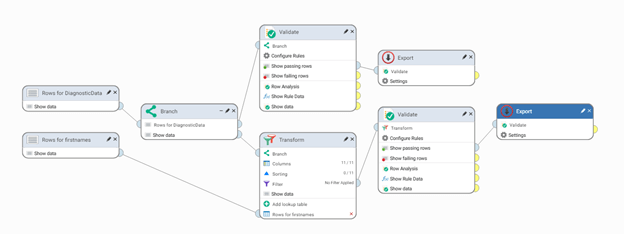

Workflow1:

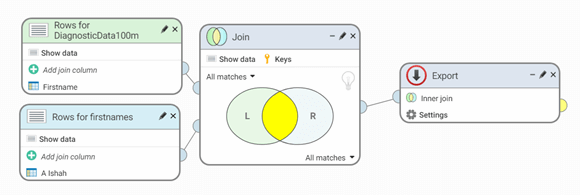

Workflow2:

Workflow3:

Test results

| RAM | 4 GB | 8 GB | 16 GB | 32 GB | 64 GB | 128 GB |
|---|---|---|---|---|---|---|
| Workflow1 | 00:28:38 | 00:28:09 | 00:28:00 | 00:28:16 | 00:28:23 | 00:28:37 |
| Workflow2 | 02:39:02 | 02:19:10 | 02:16:28 | 02:16:58 | 02:16:41 | 02:14:50 |
| Workflow3 | 00:06:00 | 00:05:58 | 00:06:03 | 00:06:10 | 00:06:10 | 00:06:41 |
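To make the results easier to compare, the sketch below converts each time to seconds and expresses it as a percentage difference from the 16 GB run. The timings are copied from the table above; the helper names are hypothetical.

```python
RAM_GB = [4, 8, 16, 32, 64, 128]

# Timings (hh:mm:ss) copied from the test results table.
RESULTS = {
    "Workflow1": ["00:28:38", "00:28:09", "00:28:00", "00:28:16", "00:28:23", "00:28:37"],
    "Workflow2": ["02:39:02", "02:19:10", "02:16:28", "02:16:58", "02:16:41", "02:14:50"],
    "Workflow3": ["00:06:00", "00:05:58", "00:06:03", "00:06:10", "00:06:10", "00:06:41"],
}


def to_seconds(hms: str) -> int:
    """Convert an hh:mm:ss string to a number of seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def percent_vs_baseline(times: list[str], baseline_gb: int = 16) -> dict[int, float]:
    """Return the % difference in run time relative to the baseline RAM size.

    Positive values mean the run was slower than the baseline.
    """
    base = to_seconds(times[RAM_GB.index(baseline_gb)])
    return {
        gb: round(100 * (to_seconds(t) - base) / base, 1)
        for gb, t in zip(RAM_GB, times)
    }


if __name__ == "__main__":
    for name, times in RESULTS.items():
        print(name, percent_vs_baseline(times))
```

Read this way, Workflow2 at 4 GB takes about 16.5% longer than at 16 GB, while the 128 GB run is only about 1.2% faster.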

On each environment, the following were run:

Load test - 100 million rows x 45 columns test file (same spec as used in load testing, generated on each machine)

Profile test - 100 million rows x 45 columns test file (same spec as used in load testing, generated on each machine)

Workflow1, run with diagnostic data (10 million rows x 10 columns) and Firstnames:

Workflow2, run with diagnostic data (10 million rows x 10 columns) and Firstnames:

Azure VM specifications

Instance1: Standard

By default, Data Studio uses an optimized maximum of 16 GB but this instance comes with the default size of 28 GB which was not changed.

Instance2: Premium

By default, Data Studio uses an optimized maximum of 16 GB but this instance comes with the default size of 28 GB which was not changed.

Test run (averages)

| Instance | Load time | Profile time | Total time | Workflow1 | Workflow2 |
|---|---|---|---|---|---|
| Instance1 (Standard) | 02:21:15 | 03:25:57 | 05:47:12 | 00:38:07 | 02:12:06 |
| Instance2 (Premium) | 00:43:55 | 02:05:47 | 02:49:42 | 00:34:21 | 02:11:31 |

AWS VM specifications

Instance3: Standard

By default, Data Studio uses an optimized maximum of 16 GB but this instance comes with the default size of 61 GB which was not changed.

Instance4: EBS

By default, Data Studio uses an optimized maximum of 16 GB but this instance comes with the default size of 61 GB which was not changed.

Test run (averages)

| Instance | Load time | Profile time | Total time | Workflow1 | Workflow2 |
|---|---|---|---|---|---|
| Instance3 (Standard) | 00:59:45 | 04:21:12 | 05:20:57 | 00:35:03 | 02:01:13 |
| Instance4 (EBS) | 01:04:27 | 03:24:41 | 04:29:08 | 00:33:57 | 01:59:46 |
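The averages for the four instances can be cross-checked and compared with a short script. This is a sketch using only the figures from the Azure and AWS tables; the function names are hypothetical. It confirms that each total equals load time plus profile time, and computes how many times faster one instance is than another.

```python
from datetime import timedelta

# (load, profile, total) averages copied from the Azure and AWS tables.
RUNS = {
    "Instance1 (Standard)": ("02:21:15", "03:25:57", "05:47:12"),
    "Instance2 (Premium)": ("00:43:55", "02:05:47", "02:49:42"),
    "Instance3 (Standard)": ("00:59:45", "04:21:12", "05:20:57"),
    "Instance4 (EBS)": ("01:04:27", "03:24:41", "04:29:08"),
}


def parse_hms(hms: str) -> timedelta:
    """Convert an hh:mm:ss string to a timedelta."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def total_matches(load: str, profile: str, total: str) -> bool:
    """Check that the reported total equals load time plus profile time."""
    return parse_hms(load) + parse_hms(profile) == parse_hms(total)


def speedup(slower: str, faster: str) -> float:
    """Return how many times shorter the second duration is than the first."""
    return parse_hms(slower) / parse_hms(faster)


if __name__ == "__main__":
    for name, (load, profile, total) in RUNS.items():
        print(f"{name}: total consistent = {total_matches(load, profile, total)}")
    azure_load = speedup(RUNS["Instance1 (Standard)"][0], RUNS["Instance2 (Premium)"][0])
    print(f"Azure Premium vs Standard load time: about {azure_load:.1f}x faster")
```

On these figures, the Azure Premium instance loads the test file roughly 3.2 times faster than the Standard one, whereas the workflow times differ far less between instances.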